Or else welcome the good ol' friend, the O(n^2) back in your life.

There are three common ways people add elements to vectors in R. First way:

This is bad. It does what it looks like: adds one element to the vector and then copies it to another variable, which just happens to be the same variable 'l'. This is clearly O(n^2).

The second method is this:

This approach looks decent because, coming from a Python and Java background, a good implementation would allow appending elements to vectors in amortized O(1) time. However, this holds an unpleasant surprize. Another good ol' O(n^2) in lurking in the shadows here.

The method f2 is arguably better than f1, but none of them is any match for f3 which simply uses pre-allocation:

Of course these are just cooked up examples, but it is not difficult to imagine situations where such incremental creation of list is inevitable (think reading from DB cursors). For this particular example, the fastest solution involves a sapply but is not much faster then f3:

So in most cases it'll be better to overestimate the number of items in a list and then truncating it to the right size than dynamically expanding it.

And don't forget to smile and wave at the hidden O(n^2)!

~

musically_ut

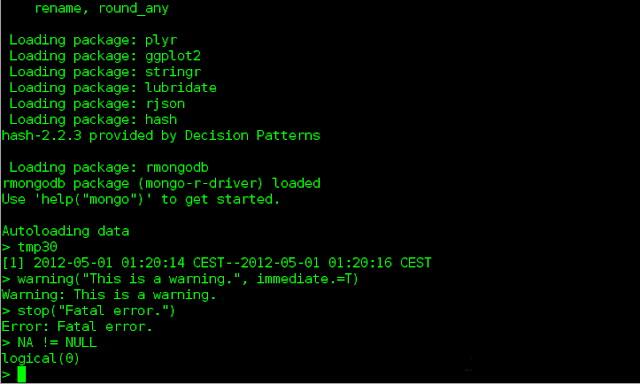

PS: If you want to run the profile yourself, here's the gist of the functions and the profiling code.

There are three common ways people add elements to vectors in R. First way:

f1 <- function (n) {

l <- list()

for(idx in 1:n) {

l <- append(l, idx)

}

return(l)

}

This is bad. It does what it looks like: adds one element to the vector and then copies it to another variable, which just happens to be the same variable 'l'. This is clearly O(n^2).

The second method is this:

f2 <- function (n) {

l <- list()

for(idx in 1:n) {

l[[length(l) + 1]] <- idx

}

return(l)

}

This approach looks decent because, coming from a Python and Java background, a good implementation would allow appending elements to vectors in amortized O(1) time. However, this holds an unpleasant surprize. Another good ol' O(n^2) in lurking in the shadows here.

|

| Time taken to create a vector of size n |

f3 <- function (n) {

l <- vector("list", n)

for(idx in 1:n) {

l[[idx]] <- idx

}

return(l)

}

Of course these are just cooked up examples, but it is not difficult to imagine situations where such incremental creation of list is inevitable (think reading from DB cursors). For this particular example, the fastest solution involves a sapply but is not much faster then f3:

f4 <- function (n) {

return(as.list(sapply(1:n, function (idx) idx)))

}

So in most cases it'll be better to overestimate the number of items in a list and then truncating it to the right size than dynamically expanding it.

And don't forget to smile and wave at the hidden O(n^2)!

~

musically_ut

PS: If you want to run the profile yourself, here's the gist of the functions and the profiling code.